SLAM project

Franceschini, Mouroum, Tadiello, Major

29/02/2019

Overview

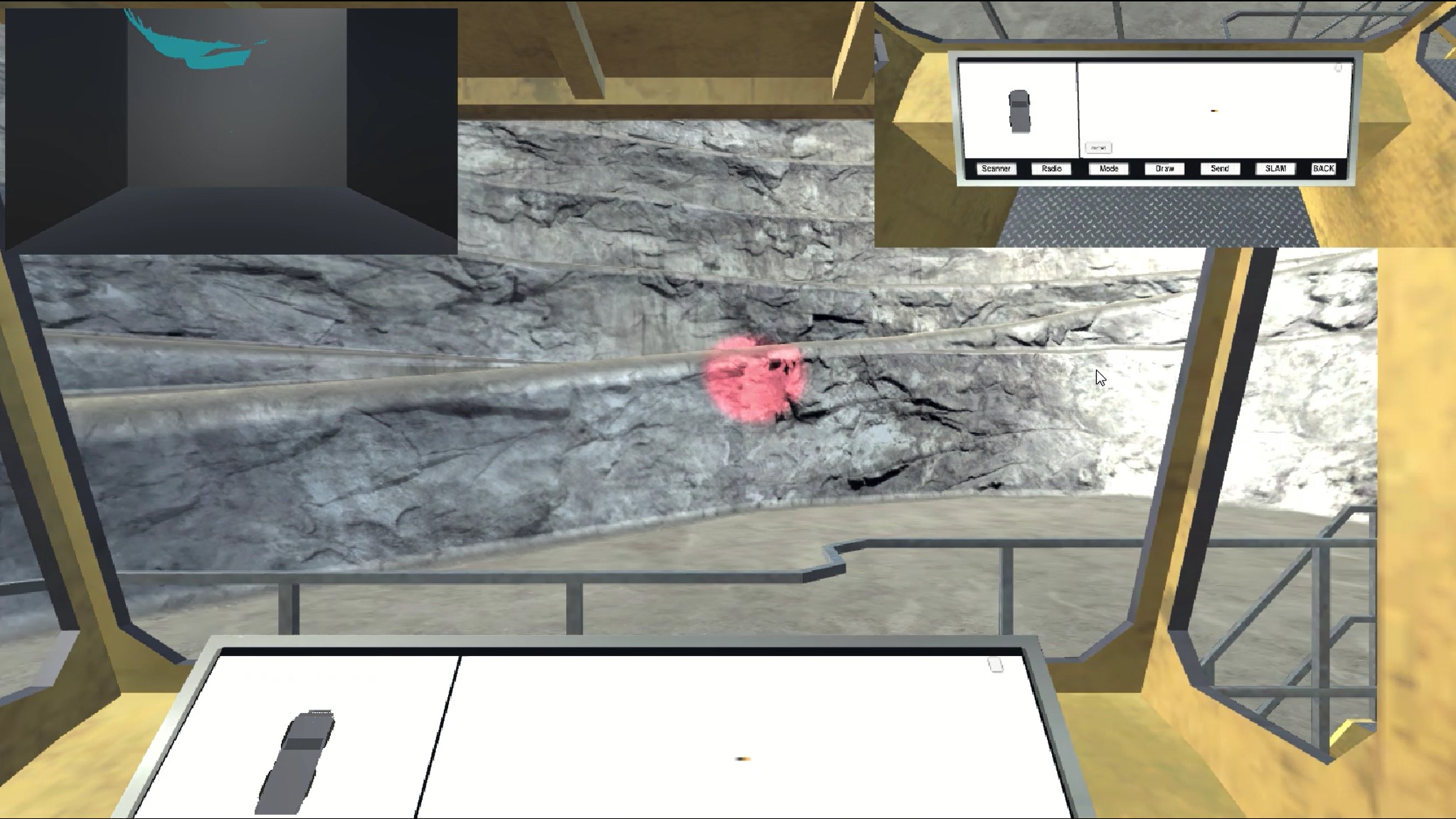

With the increasing popularity of autonomous systems and advances in key technologies such as robotics and data processing, SLAM (Simultaneous Localization And Mapping) is gaining importance. This project aims to teach students about SLAM. In particular, it illustrates in an intuitive way how the Iterative Closest Point (ICP) algorithm works. Students explore a 3D mine environment from inside a vehicle equipped with SLAM technology. When the player modifies the parameter values, they receive real-time feedback on the performance of the ICP algorithm. By trying different parameter combinations, students develop an intuition for the complex dependencies within the SLAM algorithm.

Cloud point of view

Set parameters

Unity

The Unity engine was used to load the point cloud (PC) of the mine and the ToF camera simulator attached to a mine truck. Every point cloud captured by the ToF camera is sent to the PCL process in order to build the map. Meanwhile, Unity continuously receives the refreshed map from PCL. The points of the latest map are plotted in a black room.

ICP

The ICP code was implemented using the Point Cloud Library (PCL). Players can switch between brute-force ICP and ICP with normals. To maximize performance, the code runs in four separate threads:

- Create the PC object from the message that comes from Unity and push the object onto a priority queue.

- Read from the priority queue and reconstruct the separate clouds through the registration process using the ICP algorithm. Push the result onto another queue.

- Read the generated points from that queue and transform them into a message in ASCII format.

- Handle the settings that come from Unity.
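The four-thread layout above can be sketched in a few lines. This is only an illustrative Python sketch, not the project's PCL code: the message format (one `x y z` triple per line), the use of a sequence number as the queue priority, the `use_normals` setting name, and the stand-in for the ICP registration step (which simply appends points) are all assumptions made for the example.

```python
import queue
import threading


def parse_cloud(message):
    """Parse an ASCII message with one 'x y z' triple per line (assumed format)."""
    return [tuple(float(v) for v in line.split())
            for line in message.splitlines() if line.strip()]


def to_ascii(points):
    """Serialize points back into the assumed 'x y z' per-line ASCII format."""
    return "\n".join(f"{x} {y} {z}" for x, y, z in points)


def run_pipeline(messages, setting_updates=()):
    incoming = queue.PriorityQueue()   # thread 1 -> thread 2
    registered = queue.Queue()         # thread 2 -> thread 3
    outgoing = []                      # serialized maps, ready to publish
    settings = {"use_normals": False}  # hypothetical setting name
    settings_lock = threading.Lock()

    def receive():
        # Thread 1: build cloud objects and push them with a priority.
        # The priority here is the arrival sequence number (an assumption).
        for seq, msg in enumerate(messages):
            incoming.put((seq, parse_cloud(msg)))
        incoming.put((float("inf"), None))  # end-of-stream marker

    def register_clouds():
        # Thread 2: merge each cloud into the global map.
        # A real implementation would align the cloud with ICP first.
        global_map = []
        while True:
            _, cloud = incoming.get()
            if cloud is None:
                registered.put(None)
                return
            global_map.extend(cloud)
            registered.put(list(global_map))

    def serialize():
        # Thread 3: turn each refreshed map into an ASCII message.
        while True:
            points = registered.get()
            if points is None:
                return
            outgoing.append(to_ascii(points))

    def handle_settings():
        # Thread 4: apply settings updates coming from the front end.
        for key, value in setting_updates:
            with settings_lock:
                settings[key] = value

    threads = [threading.Thread(target=f)
               for f in (receive, register_clouds, serialize, handle_settings)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return outgoing, settings
```

Decoupling the stages with queues lets the slow registration step run without blocking message parsing or serialization, which is the main benefit of this layout.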

Communication

For communication we use ZeroMQ, which allows us to establish three different publish/subscribe connections (fig. 2).

A brief schema of how the communication between Unity and PCL works.

ToF camera simulator improvements

Several aspects of the simulator have been improved to make the ToF camera more realistic. The given camera simulates a perfect device with no noise and optimal characteristics, so the following features were added to give it more realistic behaviour:

- Black surfaces: if the RGB color of the surface that the sensor is trying to reach is below a certain threshold, the points are not captured, i.e. when the material's color is very close to black, the points cannot be captured.

- Distance noise: noise drawn from a Gaussian distribution is added to the measured distance of each point, with a variance that grows with the distance. The variance grows linearly, with a slope taken from tests on real ToF cameras (1 cm every 5.8 meters), i.e. the noise is higher when the point is farther away.

- Reflective noise: for each point reached by the camera, a Gaussian distribution is created from the point's RGB components, with a variance that is higher the closer the point's color is to white. The error drawn from this distribution is added to the measured distance.
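The three effects listed above can be combined into a single measurement function. The following Python sketch is illustrative only and is not the Unity simulator code. It assumes color channels in the range 0 to 1, uses the mean channel value as the brightness measure, and treats the 1 cm per 5.8 m slope as a standard deviation in meters; the black threshold and the maximum reflective spread are hypothetical values chosen for the example.

```python
import random

BLACK_THRESHOLD = 0.1            # hypothetical cut-off for "too dark to measure"
SIGMA_PER_METER = 0.01 / 5.8     # 1 cm of spread every 5.8 m (slope from the text)
REFLECTIVE_SIGMA_MAX = 0.01      # hypothetical spread for a pure white surface (m)


def brightness(rgb):
    """Mean of the RGB channels, each assumed to lie in [0, 1]."""
    return sum(rgb) / 3.0


def distance_sigma(distance):
    """Spread of the distance noise, growing linearly with the distance."""
    return SIGMA_PER_METER * distance


def reflective_sigma(rgb):
    """Spread of the reflective noise, larger the closer the color is to white."""
    return REFLECTIVE_SIGMA_MAX * brightness(rgb)


def simulate_measurement(distance, rgb, rng=random):
    """Return a noisy distance in meters, or None if the surface is too dark."""
    if brightness(rgb) < BLACK_THRESHOLD:
        return None  # black surfaces: the point is not captured
    noise = rng.gauss(0.0, distance_sigma(distance))   # distance noise
    noise += rng.gauss(0.0, reflective_sigma(rgb))     # reflective noise
    return distance + noise
```

For example, `simulate_measurement(5.8, (0.5, 0.5, 0.5))` returns a value scattered around 5.8 m, while a call with `(0.0, 0.0, 0.0)` returns `None`. If the slope is meant as a variance rather than a standard deviation, the square root of the scaled value would be passed to `gauss` instead.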